Lunch Time Python

28.10.2022: PyTorch

PyTorch is a free and open-source machine learning framework that was originally developed by engineers at Facebook and is now part of the Linux Foundation. Its two main features are a tensor computation framework (similar to NumPy) with strong support for GPU acceleration, and support for neural networks via autograd, its automatic differentiation engine.

Lunch Time Python, Scientific Software Center, Heidelberg University

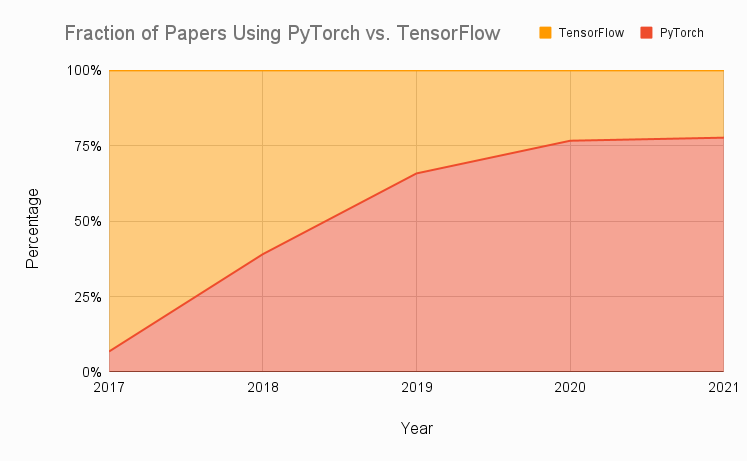

0 Why use PyTorch?

Source: Twitter

Source: Assembly AI

# first imports

import torch

from torch import nn # model

from torch import optim # optimizer

from torchvision import datasets, transforms # data and data transforms

from torch.utils.data import random_split, DataLoader # utilities

import numpy as np

import matplotlib.pyplot as plt

1 Tensors

# directly from data

data = [[1, 2], [3, 4]]

x_data = torch.tensor(data)

# from numpy array

np_array = np.array(data)

x_np = torch.from_numpy(np_array)

# from another tensor

x_ones = torch.ones_like(x_data) # retains the properties of x_data

print(f"Ones Tensor: \n {x_ones} \n")

x_rand = torch.rand_like(x_data, dtype=torch.float) # overrides the datatype of x_data

print(f"Random Tensor: \n {x_rand} \n")

Ones Tensor:

tensor([[1, 1],

[1, 1]])

Random Tensor:

tensor([[0.6606, 0.7544],

[0.3314, 0.4466]])
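One detail of the NumPy route is worth knowing: on the CPU, torch.from_numpy shares memory with the source array, whereas torch.tensor copies the data. The following is a minimal sketch of that difference; the names shared and copied are illustrative and not part of the original slides.

```python
import numpy as np
import torch

np_array = np.array([[1, 2], [3, 4]])
shared = torch.from_numpy(np_array)  # shares memory with np_array
copied = torch.tensor(np_array)      # independent copy of the data

np_array[0, 0] = 100  # modify the NumPy array in place

print(shared[0, 0].item())  # the shared tensor sees the change
print(copied[0, 0].item())  # the copy keeps the original value
```

If the array and the tensor must not affect each other, copying is the safer choice.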

# use tuples to determine tensor dimensions

shape = (

2,

3,

)

rand_tensor = torch.rand(shape)

ones_tensor = torch.ones(shape)

zeros_tensor = torch.zeros(shape)

print(f"Random Tensor: \n {rand_tensor} \n")

print(f"Ones Tensor: \n {ones_tensor} \n")

print(f"Zeros Tensor: \n {zeros_tensor}")

Random Tensor:

tensor([[0.5525, 0.8326, 0.4273],

[0.4402, 0.8647, 0.3496]])

Ones Tensor:

tensor([[1., 1., 1.],

[1., 1., 1.]])

Zeros Tensor:

tensor([[0., 0., 0.],

[0., 0., 0.]])

# tensor attributes

tensor = torch.rand(3, 4)

print(f"Shape of tensor: {tensor.shape}")

print(f"Datatype of tensor: {tensor.dtype}")

print(f"Device tensor is stored on: {tensor.device}")

Shape of tensor: torch.Size([3, 4])

Datatype of tensor: torch.float32

Device tensor is stored on: cpu

# by default, tensors are created on CPU

# We move our tensor to the GPU if available

if torch.cuda.is_available():

    tensor = tensor.to("cuda")

# indexing like numpy

tensor = torch.ones(4, 4)

print(f"First row: {tensor[0]}")

print(f"First column: {tensor[:, 0]}")

print(f"Last column: {tensor[..., -1]}")

tensor[:, 1] = 0

print(tensor)

First row: tensor([1., 1., 1., 1.])

First column: tensor([1., 1., 1., 1.])

Last column: tensor([1., 1., 1., 1.])

tensor([[1., 0., 1., 1.],

[1., 0., 1., 1.],

[1., 0., 1., 1.],

[1., 0., 1., 1.]])

# joining tensors

t1 = torch.cat([tensor, tensor, tensor], dim=1)

print(t1)

tensor([[1., 0., 1., 1., 1., 0., 1., 1., 1., 0., 1., 1.],

[1., 0., 1., 1., 1., 0., 1., 1., 1., 0., 1., 1.],

[1., 0., 1., 1., 1., 0., 1., 1., 1., 0., 1., 1.],

[1., 0., 1., 1., 1., 0., 1., 1., 1., 0., 1., 1.]])

# This computes the matrix multiplication between two tensors. y1, y2, y3 will have the same value

y1 = tensor @ tensor.T

y2 = tensor.matmul(tensor.T)

y3 = torch.rand_like(y1)

torch.matmul(tensor, tensor.T, out=y3)

# This computes the element-wise product. z1, z2, z3 will have the same value

z1 = tensor * tensor

z2 = tensor.mul(tensor)

z3 = torch.rand_like(tensor)

torch.mul(tensor, tensor, out=z3)

tensor([[1., 0., 1., 1.],

[1., 0., 1., 1.],

[1., 0., 1., 1.],

[1., 0., 1., 1.]])

# GPU via CUDA

# torch.randn(5).cuda()

# better (more flexible):

device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")

torch.randn(5).to(device)

tensor([ 1.3776, 0.9256, -0.3449, 0.6000, 0.9015])

2 Datasets and DataLoaders

- Dataset: stores the samples and their corresponding labels

- DataLoader: wraps an iterable around the Dataset to enable easy access to the samples
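To make the division of labour concrete, here is a minimal sketch of a custom Dataset wrapped in a DataLoader. SquaresDataset is a hypothetical toy class invented for this illustration; it is not part of torchvision or of the original slides.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class SquaresDataset(Dataset):
    """Hypothetical toy dataset: sample i is the pair (i, i**2)."""

    def __init__(self, n):
        self.x = torch.arange(n, dtype=torch.float32)
        self.y = self.x ** 2

    def __len__(self):
        # number of samples in the dataset
        return len(self.x)

    def __getitem__(self, idx):
        # one (sample, label) pair
        return self.x[idx], self.y[idx]


toy_data = SquaresDataset(10)
toy_loader = DataLoader(toy_data, batch_size=4, shuffle=False)

# The loader groups samples into batches; the last batch may be smaller.
batch_shapes = [tuple(xb.shape) for xb, yb in toy_loader]
print(batch_shapes)
```

The Dataset only needs __len__ and __getitem__; batching, shuffling and iteration are handled by the DataLoader.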

# import and split data

train_data = datasets.MNIST(

"data", train=True, download=True, transform=transforms.ToTensor()

)

train, val = random_split(train_data, [55000, 5000])

train_loader = DataLoader(train, batch_size=32)

val_loader = DataLoader(val, batch_size=32)

Downloading http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

Failed to download (trying next): HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-images-idx3-ubyte.gz to data/MNIST/raw/train-images-idx3-ubyte.gz

Extracting data/MNIST/raw/train-images-idx3-ubyte.gz to data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

Failed to download (trying next): HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/train-labels-idx1-ubyte.gz to data/MNIST/raw/train-labels-idx1-ubyte.gz

Extracting data/MNIST/raw/train-labels-idx1-ubyte.gz to data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

Failed to download (trying next): HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-images-idx3-ubyte.gz to data/MNIST/raw/t10k-images-idx3-ubyte.gz

Extracting data/MNIST/raw/t10k-images-idx3-ubyte.gz to data/MNIST/raw

Downloading http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

Failed to download (trying next): HTTP Error 404: Not Found

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz

Downloading https://ossci-datasets.s3.amazonaws.com/mnist/t10k-labels-idx1-ubyte.gz to data/MNIST/raw/t10k-labels-idx1-ubyte.gz

Extracting data/MNIST/raw/t10k-labels-idx1-ubyte.gz to data/MNIST/raw

figure = plt.figure(figsize=(8, 8))

cols, rows = 3, 3

for i in range(1, cols * rows + 1):

    sample_idx = torch.randint(len(train_data), size=(1,)).item()

    img, label = train_data[sample_idx]

    figure.add_subplot(rows, cols, i)

    plt.axis("off")

    plt.imshow(img.squeeze(), cmap="gray")

plt.show()

train_features, train_labels = next(iter(train_loader))

print(f"Feature batch shape: {train_features.size()}")

print(f"Labels batch shape: {train_labels.size()}")

img = train_features[0].squeeze()

label = train_labels[0]

plt.imshow(img, cmap="gray")

plt.show()

print(f"Label: {label}")

Feature batch shape: torch.Size([32, 1, 28, 28])

Labels batch shape: torch.Size([32])

Label: 0

3 Coding a neural network

# in theory easy via stateless approach

# import torch.nn.functional as F

# loss_func = F.cross_entropy

# def model(xb):

#     return xb @ weights + bias

# print(loss_func(model(xb), yb), accuracy(model(xb), yb))

# gets messy quickly!

# define model via explicit nn.Module class

class MyModel(nn.Module):

    def __init__(self):

        super().__init__()

        self.l1 = nn.Linear(28 * 28, 64)

        self.l2 = nn.Linear(64, 64)

        self.l3 = nn.Linear(64, 10)

        self.do = nn.Dropout(0.1)

    def forward(self, x):

        h1 = nn.functional.relu(self.l1(x))

        h2 = nn.functional.relu(self.l2(h1))

        do = self.do(h2 + h1) # residual connection

        logits = self.l3(do)

        return logits

nn.Sequential is an ordered container of modules; good for easy and quick networks. No need to specify forward method!

# defining model via sequential

# shorthand, no need for forward method

model_seq = nn.Sequential(

nn.Linear(28 * 28, 64),

nn.ReLU(),

nn.Linear(64, 64),

nn.ReLU(),

nn.Dropout(0.1), # often helps with overfitting

nn.Linear(64, 10),

)

# move model to GPU/device memory

model = model_seq.to(device)

Many layers inside a neural network are parameterized, i.e. have associated weights and biases that are optimized during training. Subclassing nn.Module automatically tracks all fields defined inside your model object, and makes all parameters accessible using your model’s parameters() or named_parameters() methods.

print(f"Model structure: {model}\n\n")

for name, param in model.named_parameters():

    print(f"Layer: {name} | Size: {param.size()} | Values : {param[:2]} \n")

Model structure: Sequential(

(0): Linear(in_features=784, out_features=64, bias=True)

(1): ReLU()

(2): Linear(in_features=64, out_features=64, bias=True)

(3): ReLU()

(4): Dropout(p=0.1, inplace=False)

(5): Linear(in_features=64, out_features=10, bias=True)

)

Layer: 0.weight | Size: torch.Size([64, 784]) | Values : tensor([[-0.0138, -0.0307, 0.0052, ..., -0.0161, 0.0235, 0.0294],

[ 0.0222, -0.0078, -0.0171, ..., 0.0313, -0.0147, 0.0150]],

grad_fn=<SliceBackward0>)

Layer: 0.bias | Size: torch.Size([64]) | Values : tensor([0.0278, 0.0258], grad_fn=<SliceBackward0>)

Layer: 2.weight | Size: torch.Size([64, 64]) | Values : tensor([[ 0.0702, -0.0552, -0.0902, 0.0308, 0.0158, -0.0124, 0.0553, -0.0614,

-0.0612, -0.0754, 0.0259, -0.0574, 0.0420, 0.0197, -0.0280, 0.0351,

-0.0497, 0.1071, 0.1018, 0.0274, -0.0734, -0.0798, -0.1233, 0.0324,

-0.0010, 0.0736, 0.0217, 0.1221, -0.0228, -0.0246, 0.1167, -0.0104,

0.0552, 0.0822, -0.1056, -0.0825, 0.0340, 0.1136, 0.1118, -0.1049,

0.1125, -0.0147, -0.0932, 0.1019, -0.0782, -0.0979, 0.0715, -0.1183,

0.0686, -0.0288, 0.0262, 0.0342, 0.0954, -0.0761, -0.0746, -0.1248,

-0.1083, 0.0763, -0.1062, 0.0856, -0.0954, 0.0603, 0.0427, -0.1153],

[ 0.0531, -0.0222, 0.0409, 0.0083, -0.0872, 0.0664, -0.0883, 0.0391,

0.1189, -0.0871, 0.0465, -0.1207, 0.0331, -0.0938, -0.0729, 0.1006,

-0.0851, -0.0550, 0.0421, 0.0972, 0.1185, 0.0742, -0.0961, -0.0417,

-0.0535, 0.1018, 0.0067, -0.0951, 0.0695, -0.0600, 0.0212, -0.0610,

0.1227, -0.0690, 0.1213, 0.0954, 0.0982, -0.0257, 0.0309, 0.0408,

0.0786, -0.0048, -0.0340, -0.1091, 0.0766, -0.0323, -0.0093, -0.1147,

-0.0050, 0.0097, 0.0960, -0.0252, -0.0400, -0.1035, 0.1055, 0.0910,

-0.0683, 0.0091, 0.0160, -0.0401, 0.0950, 0.0551, 0.0471, -0.0976]],

grad_fn=<SliceBackward0>)

Layer: 2.bias | Size: torch.Size([64]) | Values : tensor([-0.0391, 0.0673], grad_fn=<SliceBackward0>)

Layer: 5.weight | Size: torch.Size([10, 64]) | Values : tensor([[-0.0908, 0.1124, -0.0261, -0.0974, -0.0857, 0.0721, 0.0765, -0.0591,

0.1101, 0.0025, 0.0267, 0.0083, -0.0115, -0.0625, -0.0660, -0.0006,

0.1047, 0.0254, 0.0871, -0.1016, -0.0159, 0.0207, 0.1123, 0.1024,

0.0709, 0.0928, -0.0967, 0.0248, 0.0637, 0.0816, 0.0021, 0.0510,

-0.0285, -0.1121, -0.0496, 0.0564, 0.0486, -0.0803, 0.1097, -0.0845,

-0.0637, -0.0584, -0.0225, 0.0663, 0.0634, 0.0408, 0.1117, 0.0571,

0.0160, -0.0979, 0.0728, 0.0611, -0.0142, 0.1052, -0.0142, 0.0506,

-0.0074, 0.1168, 0.0648, 0.1244, 0.0374, 0.1115, 0.0059, -0.0600],

[ 0.1246, -0.0531, 0.1165, 0.1042, 0.0709, -0.0760, -0.0585, 0.1224,

-0.0442, -0.0472, 0.0871, -0.0140, -0.0462, 0.0818, -0.0353, -0.0669,

0.0159, -0.0266, 0.0032, 0.0171, 0.0656, -0.0636, -0.0648, -0.0861,

0.0069, -0.0836, 0.1227, -0.0776, -0.0868, 0.0122, 0.0649, 0.0186,

-0.1142, 0.1189, 0.0200, 0.0095, -0.0982, -0.1095, -0.1245, -0.0802,

-0.0266, 0.0534, 0.0022, -0.0761, 0.1110, -0.0714, -0.1234, -0.0630,

-0.0733, -0.1115, -0.1128, -0.1136, -0.1114, 0.0320, -0.0758, -0.0304,

0.0491, 0.1185, -0.1162, 0.0813, 0.0411, -0.0222, 0.0193, -0.0442]],

grad_fn=<SliceBackward0>)

Layer: 5.bias | Size: torch.Size([10]) | Values : tensor([-0.0526, -0.0249], grad_fn=<SliceBackward0>)
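Because parameters() exposes every trainable tensor, it can also be used to count them. The sketch below rebuilds the same Sequential architecture so that it runs on its own; the name n_params is illustrative.

```python
from torch import nn

# Same architecture as model_seq above, rebuilt to keep the example self-contained.
model_seq = nn.Sequential(
    nn.Linear(28 * 28, 64),
    nn.ReLU(),
    nn.Linear(64, 64),
    nn.ReLU(),
    nn.Dropout(0.1),
    nn.Linear(64, 10),
)

# Sum the number of elements over all trainable parameter tensors.
n_params = sum(p.numel() for p in model_seq.parameters() if p.requires_grad)
print(n_params)  # (784*64 + 64) + (64*64 + 64) + (64*10 + 10) = 55050
```

ReLU and Dropout contribute nothing to the count because they have no weights.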

# define loss function

loss = nn.CrossEntropyLoss() # combines log-softmax and negative log-likelihood
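As a quick check of what this loss computes, the sketch below compares nn.CrossEntropyLoss on raw logits with an explicit log-softmax followed by the negative log-likelihood. The logits and targets are made-up values for illustration only.

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)           # made-up batch: 4 samples, 10 classes
targets = torch.tensor([1, 0, 9, 3])  # made-up class indices

# Built-in loss, applied directly to the raw logits.
ce = nn.CrossEntropyLoss()(logits, targets)

# The same quantity in two explicit steps.
log_probs = F.log_softmax(logits, dim=1)
manual = F.nll_loss(log_probs, targets)

print(torch.allclose(ce, manual))
```

This is why the model returns raw logits and has no softmax layer of its own.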

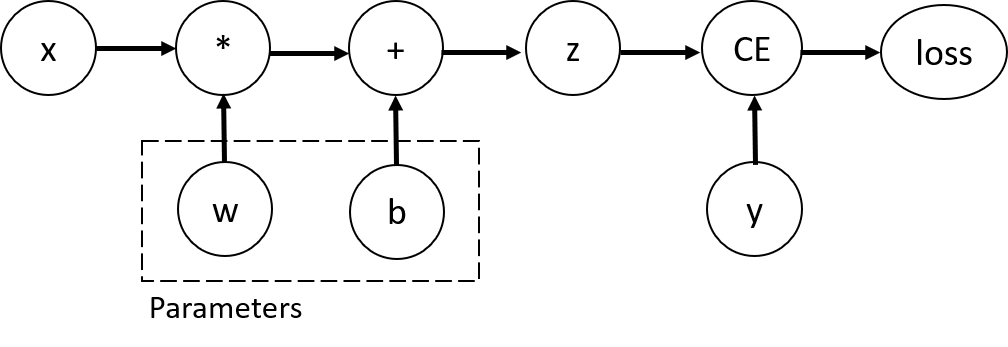

4 Backpropagation via Autograd

In a forward pass, autograd does two things simultaneously:

- run the requested operation to compute a resulting tensor

- maintain the operation’s gradient function in the DAG.

The backward pass kicks off when .backward() is called on the DAG root. autograd then:

- computes the gradients from each .grad_fn,

- accumulates them in the respective tensor’s .grad attribute

- using the chain rule, propagates all the way to the leaf tensors.
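The forward and backward passes described above can be sketched on a single scalar. The function y = x² + 2x is an arbitrary choice for this illustration.

```python
import torch

x = torch.tensor(3.0, requires_grad=True)  # leaf tensor
y = x ** 2 + 2 * x                         # forward pass: result plus recorded graph

print(x.is_leaf)              # x was created by the user, so it is a leaf
print(y.grad_fn is not None)  # y remembers the operation that produced it

y.backward()   # backward pass starting from the root y
print(x.grad)  # dy/dx = 2*x + 2, which is 8 at x = 3
```

Only leaf tensors with requires_grad=True get their .grad attribute filled in by default.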

5 Optimization of model parameters (training)

We define the following hyperparameters for training:

- Number of Epochs - the number of times to iterate over the dataset

- Batch Size - the number of data samples propagated through the network before the parameters are updated (defined in train_loader)

- Learning Rate - how much to update the model's parameters at each batch/epoch. Smaller values yield slow learning speed, while large values may result in unpredictable behavior during training.

lr = 1e-2

epochs = 5

# defining optimizer

params = model.parameters()

optimiser = optim.SGD(params, lr=lr)
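To see what the learning rate does inside the optimizer, the sketch below compares one step of optim.SGD with the update p - lr * grad computed by hand. It assumes plain SGD without momentum or weight decay; the small linear layer and the random data are made up for the illustration.

```python
import torch
from torch import nn, optim

torch.manual_seed(0)
layer = nn.Linear(3, 1)  # tiny stand-in model
opt = optim.SGD(layer.parameters(), lr=1e-2)

x = torch.randn(5, 3)
target = torch.randn(5, 1)

loss_value = nn.functional.mse_loss(layer(x), target)
loss_value.backward()

# Expected parameters after one plain SGD step, computed by hand.
expected = [p.detach() - 1e-2 * p.grad for p in layer.parameters()]

opt.step()  # the optimizer applies the same update in place

matches = all(torch.allclose(p, e) for p, e in zip(layer.parameters(), expected))
print(matches)
```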

Inside the training loop, optimization happens in three steps:

- Call optimizer.zero_grad() to reset the gradients of model parameters. Gradients by default add up; to prevent double-counting, we explicitly zero them at each iteration.

- Backpropagate the prediction loss with a call to loss.backward(). PyTorch deposits the gradients of the loss w.r.t. each parameter.

- Once we have our gradients, we call optimizer.step() to adjust the parameters by the gradients collected in the backward pass.
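The reason for the reset in the first step can be seen on a single scalar. In this minimal sketch the tensor w and the function 2 * w are arbitrary choices, and the gradient is reset by hand to mimic what zero_grad() does for every parameter.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)

(2 * w).backward()
first = w.grad.item()   # gradient of 2*w with respect to w is 2

(2 * w).backward()      # no reset in between
second = w.grad.item()  # gradients add up: 2 + 2

w.grad.zero_()          # manual reset of the accumulated gradient
(2 * w).backward()
third = w.grad.item()   # back to 2 after the reset

print(first, second, third)
```

Without the reset, each optimizer step would use the sum of all gradients computed so far rather than the gradient of the current batch.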

# define training and validation loop

# training loop

for epoch in range(epochs):

    losses = list()

    accuracies = list()

    model.train() # enables dropout/batchnorm

    for batch in train_loader:

        x, y = batch

        batch_size = x.size(0)

        # x: b x 1 x 28 x 28

        x = x.view(batch_size, -1).to(device)

        # 5 steps to train network

        # 1 forward

        l = model(x) # l: logits

        # 2 compute objective function

        J = loss(l, y.to(device))

        # 3 cleaning the gradients (could also call this on optimiser)

        model.zero_grad()

        # optimiser.zero_grad() is equivalent

        # manually: p.grad.zero_() for each parameter p (once gradients exist)

        # 4 accumulate the partial derivatives of J wrt params

        J.backward()

        # manually: p.grad.add_(dJ/dp) for each parameter p

        # 5 step in the opposite direction of the gradient

        optimiser.step()

        # could have done manual gradient update:

        # with torch.no_grad():

        #     for p in model.parameters():

        #         p -= lr * p.grad

        losses.append(J.item())

        accuracies.append(y.eq(l.detach().argmax(dim=1).cpu()).float().mean())

    print(f"epoch {epoch + 1}", end=", ")

    print(f"training loss: {torch.tensor(losses).mean():.2f}", end=", ")

    print(

        f"training accuracy: {torch.tensor(accuracies).mean():.2f}"

    ) # print two decimals

    # validation loop

    losses = list()

    accuracies = list()

    model.eval() # disables dropout/batchnorm

    for batch in val_loader:

        x, y = batch

        batch_size = x.size(0)

        # x: b x 1 x 28 x 28

        x = x.view(batch_size, -1).to(device)

        # validation only needs steps 1 and 2 (no parameter update)

        # 1 forward

        with torch.no_grad(): # more efficient, just tensor no graph connected

            l = model(x) # l: logits

        # 2 compute objective function

        J = loss(l, y.to(device))

        losses.append(J.item())

        accuracies.append(y.eq(l.detach().argmax(dim=1).cpu()).float().mean())

    print(f"epoch {epoch + 1}", end=", ")

    print(f"validation loss: {torch.tensor(losses).mean():.2f}", end=", ")

    print(

        f"validation accuracy: {torch.tensor(accuracies).mean():.2f}"

    ) # print two decimals

epoch 1, training loss: 1.26, training accuracy: 0.63

epoch 1, validation loss: 0.51, validation accuracy: 0.86

epoch 2, training loss: 0.45, training accuracy: 0.87

epoch 2, validation loss: 0.37, validation accuracy: 0.90

epoch 3, training loss: 0.37, training accuracy: 0.89

epoch 3, validation loss: 0.32, validation accuracy: 0.91

epoch 4, training loss: 0.32, training accuracy: 0.91

epoch 4, validation loss: 0.29, validation accuracy: 0.92

epoch 5, training loss: 0.29, training accuracy: 0.92

epoch 5, validation loss: 0.26, validation accuracy: 0.92
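The calls to model.train() and model.eval() in the loop matter because dropout behaves differently in the two modes. The sketch below shows this on a standalone nn.Dropout layer; p=0.5 and the all-ones input are chosen only to make the effect easy to see.

```python
import torch
from torch import nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()
train_out = drop(x)  # entries are either zeroed or scaled by 1 / (1 - p) = 2

drop.eval()
eval_out = drop(x)   # dropout is the identity in evaluation mode

print(train_out.unique())
print(torch.equal(eval_out, x))
```

This may also explain why the validation accuracy in the output above is not lower than the training accuracy in early epochs: training metrics are computed with dropout active and while the weights are still changing.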

6 Store models

# just save model weights without structure

torch.save(model.state_dict(), "model_weights.pth")

model.load_state_dict(torch.load("model_weights.pth", weights_only=True)) # weights_only=True restricts unpickling to tensors and plain containers

model.eval()

Sequential(

(0): Linear(in_features=784, out_features=64, bias=True)

(1): ReLU()

(2): Linear(in_features=64, out_features=64, bias=True)

(3): ReLU()

(4): Dropout(p=0.1, inplace=False)

(5): Linear(in_features=64, out_features=10, bias=True)

)
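A state_dict is an ordered mapping from parameter names to tensors, so it can be loaded into any model with the same architecture. The sketch below round-trips the weights of a small layer through an in-memory buffer instead of a file; the names source, target and buffer are illustrative.

```python
import io

import torch
from torch import nn

torch.manual_seed(0)
source = nn.Linear(4, 2)
target = nn.Linear(4, 2)  # same architecture, different random weights

buffer = io.BytesIO()  # in-memory stand-in for a file on disk
torch.save(source.state_dict(), buffer)
buffer.seek(0)

# weights_only=True restricts unpickling to tensors and plain containers.
state = torch.load(buffer, weights_only=True)
target.load_state_dict(state)

same = all(
    torch.equal(a, b) for a, b in zip(source.parameters(), target.parameters())
)
print(list(state.keys()))
print(same)
```

Because only tensors are stored, the class that defines the model must be available in code when the weights are loaded.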

# save whole model

torch.save(model, "model.pth")

new_model = torch.load("model.pth", weights_only=False) # a fully pickled model needs weights_only=False; only load files you trust